Apple’s dictation feature converts speech into text as the user talks. But how exactly does it work? Understanding the mechanics behind this technology is key to dispelling myths and misconceptions about its functionality.

At its core, Apple’s dictation functionality is powered by speech recognition algorithms and machine learning models. These models are trained on large amounts of linguistic data, which enables the system to recognize and transcribe spoken words with high accuracy. Like any technology, however, it has limitations and makes occasional errors.

When a user speaks into their iPhone, their voice is processed through a series of complex steps:

- Audio Capture: The iPhone’s microphone records the speech, converting sound into digital data.

- Speech Processing: The data is analyzed by Apple’s speech recognition software, which breaks it down into phonetic components.

- Pattern Matching: The algorithm compares the phonetic input with known words and phrases from its training dataset.

- Contextual Analysis: The system uses surrounding words and grammar rules to improve accuracy and select the most likely interpretation.

- Text Conversion: Finally, the processed text appears on the screen, reflecting the recognized speech.
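The pattern-matching step above can be illustrated with a minimal sketch. It assumes the audio has already been reduced to a sequence of phoneme symbols and compares that sequence against a tiny, hypothetical lexicon using edit distance. The lexicon, the ARPAbet-style symbols, and the function names are illustrative assumptions, not Apple's implementation; production recognizers use statistical acoustic models rather than a lookup like this.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences of phoneme symbols."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        curr = [i]
        for j, y in enumerate(b, 1):
            curr.append(min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (x != y),  # substitution (free if symbols match)
            ))
        prev = curr
    return prev[-1]


# Hypothetical four-word lexicon with ARPAbet-style pronunciations.
LEXICON = {
    "cat": ["K", "AE", "T"],
    "cap": ["K", "AE", "P"],
    "bat": ["B", "AE", "T"],
    "dog": ["D", "AO", "G"],
}


def closest_words(phonemes, lexicon=LEXICON):
    """Return every lexicon word tied for the smallest phoneme distance."""
    scored = sorted((edit_distance(phonemes, p), w) for w, p in lexicon.items())
    best = scored[0][0]
    return [w for d, w in scored if d == best]


print(closest_words(["K", "AE", "T"]))  # a clean input matches one word
print(closest_words(["G", "AE", "T"]))  # a noisy first sound leaves a tie
```

When the first sound is misheard, two words are equally close, which is the kind of ambiguity the later contextual-analysis step has to resolve.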

Apple’s dictation feature is designed to handle a wide variety of accents, pronunciations, and speech patterns. However, certain words or names might sometimes be misinterpreted due to factors such as phonetic similarities, background noise, or individual speech variations.

One of the biggest challenges in voice recognition is distinguishing between words that sound similar. For example, “there,” “their,” and “they’re” sound identical, so a recognizer can only tell them apart from the surrounding context. The same principle applies to names and proper nouns, especially those that appear less frequently in Apple’s training data.
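As a rough illustration of how context can separate homophones, the sketch below counts word pairs in a tiny, made-up corpus and picks the candidate that most often precedes the next word. The corpus and function names are hypothetical, and real systems rely on far larger language models than simple bigram counts.

```python
from collections import Counter

# Hypothetical toy corpus; a real recognizer is trained on far more data.
CORPUS = [
    "their house is big",
    "their dog is loud",
    "they're going home",
    "they're running late",
    "there is a dog",
    "there is a house",
]


def bigram_counts(sentences):
    """Count how often each adjacent word pair occurs in the corpus."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        counts.update(zip(words, words[1:]))
    return counts


def pick_homophone(candidates, next_word, counts):
    """Choose the candidate seen most often immediately before next_word."""
    return max(candidates, key=lambda w: counts[(w, next_word)])


COUNTS = bigram_counts(CORPUS)
HOMOPHONES = ["there", "their", "they're"]

for following in ("is", "dog", "going"):
    print(pick_homophone(HOMOPHONES, following, COUNTS), following)
```

The three candidates are acoustically indistinguishable here, so the choice rests entirely on which word pairs the corpus happens to contain. That is also why rare names, which have little supporting context in the data, are easier to get wrong.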

Dictation does not always depend on Apple’s servers: on supported devices and languages, speech can be processed locally on the device. This means that recognition accuracy may differ from situation to situation and may improve over time based on user input.

Although Apple’s dictation feature is incredibly advanced, it is not immune to errors. The occasional misinterpretation of words or names does not indicate bias—it simply reflects the inherent limitations of AI-powered speech recognition.

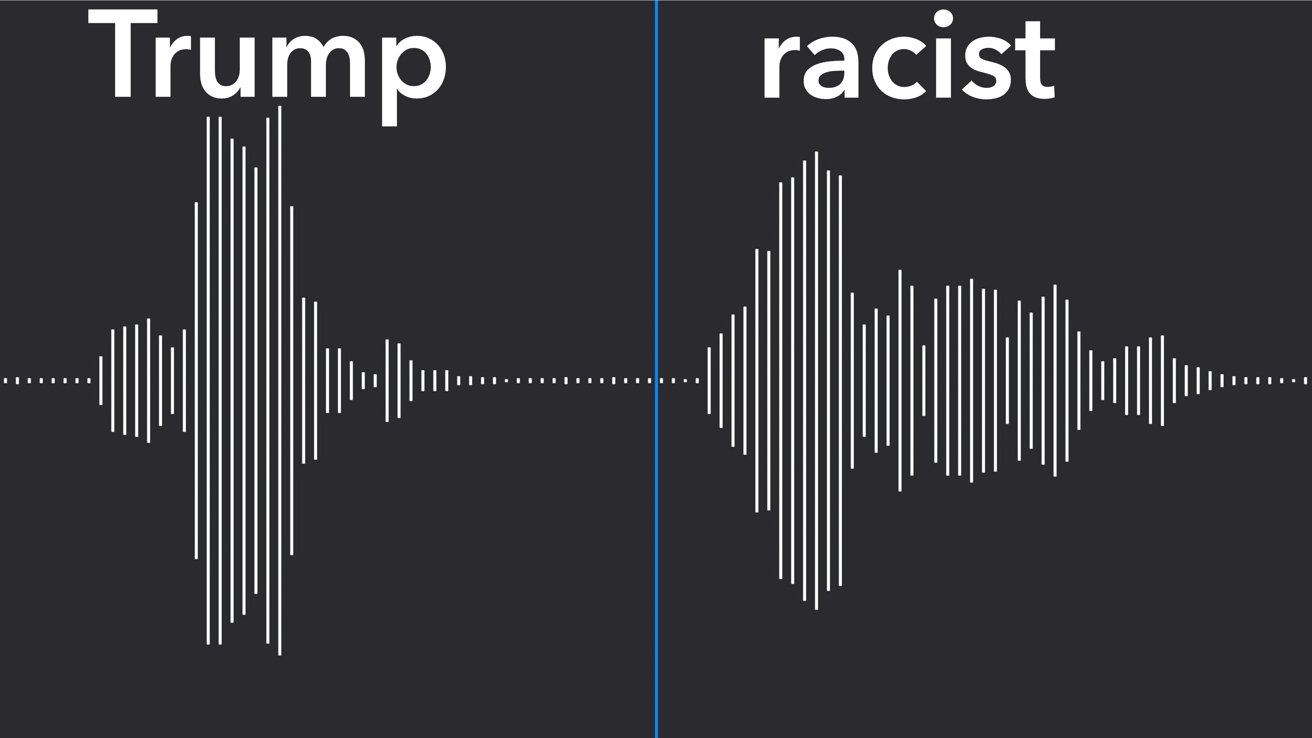

The recent controversy surrounding Apple’s iPhone dictation feature began when some users noticed that saying the word “racist” during dictation would sometimes briefly generate the word “Trump” on their screens before correcting itself. This sparked a wave of online discussions, with some individuals interpreting this as a deliberate act of political bias or even as a form of subliminal messaging.

Social media platforms quickly became the battleground for the debate. Numerous users posted videos and screenshots showing the purported issue, with some claiming that Apple had intentionally programmed the dictation feature to associate Trump’s name with the word “racist.” Others, however, were more skeptical, asserting that the phenomenon was likely a glitch in the system rather than a deliberate political statement.

Such claims gained traction in politically charged corners of the internet, where discussions around Silicon Valley’s perceived biases against conservative voices are frequent. Some conspiracy theories even emerged, suggesting that Apple was secretly manipulating public perception through its technology—a claim that has no basis in fact.

However, voice recognition experts and AI specialists provide a more grounded explanation. The misinterpretation of words in speech-to-text technology is not new and has been observed with various names and phrases over the years. Phonetic similarities between words, the way machine learning processes spoken input, and the context in which words are commonly used all play a role in occasional mistranscriptions.

Dr. Emily Zhang, an AI researcher at MIT, explains, “Voice recognition algorithms rely on probability and pattern matching. If two words share phonetic characteristics and frequently appear in related contexts, the system may briefly confuse them before self-correcting. This is not evidence of a political agenda—it’s just how AI learns through data.”

Similar issues have cropped up before with other AI-driven dictation tools and even predictive text features. Certain words that often appear near each other in digital discourse may unintentionally influence the way language models function. Given the frequent discussions around Donald Trump and topics related to racism in recent years, it is not entirely surprising that such a linguistic overlap could manifest in an unintended way.

Another factor influencing this specific misinterpretation is the evolution of Apple’s machine learning model. Voice recognition technology is constantly adapting as it takes into account new language patterns. This means that if words like “Trump” and “racist” have appeared in close proximity in online text, news articles, or even conversations, the algorithm might unintentionally link them in ways users wouldn’t expect.

Ultimately, this incident underscores how difficult it is to perfect voice recognition technology, especially when the words involved are politically sensitive. These systems are impressive, but they remain works in progress: they keep learning and improving, yet the complexity of human language leaves them prone to occasional inaccuracies.

Given the strong emotions surrounding political figures and the increasing reliance on technology in everyday life, it’s understandable why some users might jump to conclusions about Apple’s dictation feature. However, claims of intentional bias or conspiratorial motives quickly fall apart under closer examination.

One of the main arguments put forward by those alleging bias is that Apple’s speech-to-text function must have been deliberately programmed to make this connection. This assumption, however, misunderstands how machine learning algorithms operate. These systems do not pursue biases in a purposeful or strategic way; they analyze patterns in the data they were trained on and predict the most likely output.

As AI expert Dr. Michael Levin explains, “People sometimes assume AI functions with intention or motive, but really, we’re dealing with probabilities and contextual learning. If two words are frequently found together in media and user-generated content, an AI model may recognize their association without understanding meaning in a human sense.”

Another cornerstone of the conspiracy claims is the idea that Apple, or the tech industry more broadly, is engaging in political censorship or social engineering. This belief is fueled by widespread distrust of tech companies, particularly among certain political groups. However, there is no credible evidence supporting the notion that Apple would risk alienating a large segment of its user base through such a subtle and ineffective manipulation tactic.

Apple, like many other tech giants, has faced criticisms in the past regarding algorithmic discrepancies, but its primary business goal remains maximizing user experience and trust. A misinterpretation of speech input would harm the user experience rather than serve any meaningful purpose in shaping opinions, making such speculation implausible.

There are also precedents for dictation mistakes involving other public figures and common words. Previous reports have highlighted how other high-profile names, when said under particular conditions, have been mistranscribed due to phonetic similarity to unrelated words. Such occurrences have not been labeled as politically motivated but rather as byproducts of AI’s natural learning process.

Additionally, the subjective nature of perception plays a role in fueling these theories. If a person already suspects that tech companies are biased against a particular political figure, they may be more likely to interpret innocent technical errors as deliberate actions. In psychology, this is known as confirmation bias: the tendency to interpret new evidence as confirmation of one’s preexisting beliefs. In other words, people see what they expect to see rather than considering all possible explanations equally.

A more constructive approach to these concerns would be greater public education on how AI-driven dictation functions. Rather than assuming bias, users should be encouraged to report issues, seek expert opinions, and consider the broader technological context. By understanding that speech recognition software is neither perfect nor politically driven, we can shift the conversation from suspicion to productive discussion about how to improve such technology moving forward.

At the heart of the iPhone’s dictation feature lies a continuously evolving machine-learning model that adapts based on patterns of human speech. These models are designed to recognize common words and phrases based on linguistic patterns observed across large datasets. However, the way AI systems process language can lead to unexpected associations, as seen in the controversy surrounding the brief substitution of “Trump” when users dictate the word “racist.”

Machine learning models function by analyzing vast amounts of text and spoken language data, identifying correlations, and using probability to determine the most likely words spoken. This means that if two words frequently appear together in online discourse, news articles, or conversations, the model may establish an implicit association between them. It’s not that the AI “believes” these words are connected in a meaningful way—it simply observes patterns and attempts to predict language accordingly.
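One common way to quantify how strongly two words are associated in text is pointwise mutual information (PMI), which compares how often the words appear together with how often they would if they were independent. The sketch below computes it over a small, invented set of documents. The documents and function names are assumptions made for illustration, and nothing here describes how Apple's models are actually trained.

```python
import math
from collections import Counter
from itertools import combinations

# Invented example documents; each string stands in for one article or post.
DOCS = [
    "storm flood warning",
    "storm flood damage",
    "picnic sunshine park",
    "storm picnic cancelled",
]


def doc_frequencies(documents):
    """Count in how many documents each word and each word pair appears."""
    word_df, pair_df = Counter(), Counter()
    for doc in documents:
        words = set(doc.lower().split())
        word_df.update(words)
        pair_df.update(frozenset(p) for p in combinations(sorted(words), 2))
    return word_df, pair_df


def pmi(a, b, documents):
    """PMI in bits: log2( P(a, b) / (P(a) * P(b)) ) over document counts."""
    word_df, pair_df = doc_frequencies(documents)
    joint = pair_df[frozenset((a, b))]
    if joint == 0:
        return float("-inf")  # the pair never co-occurs
    n = len(documents)
    return math.log2(joint * n / (word_df[a] * word_df[b]))


print(pmi("storm", "flood", DOCS))   # positive: together more than chance
print(pmi("storm", "picnic", DOCS))  # negative: together less than chance
print(pmi("flood", "picnic", DOCS))  # -inf: never seen together
```

A positive score only says that two words turn up together more often than chance in this particular collection. It carries no notion of meaning or intent, which is the point the paragraph above makes about statistical association.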

The issue of unintended word substitutions is not new in speech recognition technology. Similar incidents have occurred with other AI-powered keyboards, voice assistants, and language models. For example, predictive text algorithms in messaging apps have mistakenly suggested words that fit the surrounding context but were not intended by the user. Such inaccuracies arise from the intersection of probability-based learning and the imperfections of natural language processing.

To further understand how linguistic data influences these models, it’s helpful to examine how AI language processing works:

- Data Training: AI-driven dictation models are trained on text sourced from books, articles, conversations, and online content, processing vast amounts of language data to recognize common speech patterns.

- Phonetic Similarity: Words that share sounds (reportedly the case for “Trump” and parts of “racist”) can sometimes be mistaken for one another, particularly in noisy environments or with variations in pronunciation.

- Contextual Probability: The system predicts words based on their likelihood of appearing together, which can sometimes lead to unexpected but statistically explainable errors.

- Self-Correction Mechanism: AI models often refine their output in real time as more audio arrives, so an initial mistake, such as “Trump” flashing briefly before being replaced with “racist,” can be corrected within a fraction of a second.
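The interplay between contextual probability and self-correction can be sketched with a toy streaming decoder. It assumes each candidate word has a prior probability from a language model and receives an acoustic score for every chunk of audio, and the displayed word is whichever candidate has the best combined score so far. All candidate words, probabilities, and function names below are invented for illustration and do not come from Apple's system.

```python
import math


def best_hypothesis(acoustic_totals, prior_logps):
    """Return the candidate with the highest combined log score."""
    return max(acoustic_totals, key=lambda w: acoustic_totals[w] + prior_logps[w])


def stream_decode(chunks, prior_logps):
    """Yield the word that would be displayed after each audio chunk."""
    totals = {word: 0.0 for word in prior_logps}
    for chunk in chunks:
        for word, logp in chunk.items():
            totals[word] += logp  # accumulate acoustic evidence per candidate
        yield best_hypothesis(totals, prior_logps)


# Invented numbers: the language model prefers "rain" before any audio is heard.
PRIORS = {"rain": math.log(0.7), "train": math.log(0.3)}

# Invented acoustic scores: the first chunk is ambiguous, the second is not.
CHUNKS = [
    {"rain": math.log(0.5), "train": math.log(0.5)},
    {"rain": math.log(0.1), "train": math.log(0.9)},
]

print(list(stream_decode(CHUNKS, PRIORS)))
```

After the first chunk the audio cannot separate the candidates, so the prior decides and the more common word is shown. Once the second chunk arrives, the acoustic evidence outweighs the prior and the displayed word changes, which mirrors the brief flash-then-correct behavior described in the list.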

These misinterpretations are not evidence of bias but rather byproducts of language modeling mechanics. In the case of Apple’s dictation feature, the brief appearance of “Trump” is a result of how AI systems process phonetics and word relationships, rather than a deliberate attempt to mislead users.

A key factor here is that public figures often have names that appear in numerous linguistic contexts. In online discussions, for example, some words may frequently appear in proximity to specific names due to the nature of political discourse and media coverage. If Trump’s name has been used in broader discussions surrounding race, the AI may have registered a statistical overlap between those terms in its training data—even without any explicit programming or intentional bias.

In a broader sense, this case reaffirms that AI-driven speech recognition still has limitations. While Apple and other tech companies strive to refine these technologies, the complexity of human language ensures that occasional mistakes will occur. It is an ongoing challenge for developers to balance accuracy while preventing unintended associations, particularly when language use is constantly evolving.

Understanding how AI systems learn and process language helps dispel unfounded fears about political or societal manipulation. Rather than viewing these errors as acts of deliberate programming, they should be seen as opportunities to refine speech recognition technology and improve its accuracy. By recognizing the technical aspects behind word recognition models, users can have a more informed and reasonable perspective on the challenges inherent in AI-driven language processing.

Apple has responded to the controversy by clarifying that the misinterpretation of “Trump” in dictation was not intentional and did not stem from bias. Instead, the company pointed to the complex nature of machine learning algorithms as the root cause and confirmed that it is actively working on refining the accuracy of its voice recognition system.

In a statement, Apple acknowledged the concerns raised by users and reiterated its commitment to providing a neutral and reliable dictation experience. “Our speech recognition technology is designed to adapt and improve over time based on user interactions. Occasionally, unintended associations may arise due to phonetic similarities or contextual probabilities, but we continuously refine our models to ensure accuracy and neutrality,” an Apple representative explained.

To address the issue, Apple has already begun implementing updates to its dictation function. These updates involve optimizing the machine learning model that powers voice recognition by:

- Enhancing Phonetic Differentiation: Apple is fine-tuning how its AI interprets similar-sounding words to prevent unintended name associations.

- Expanding Training Datasets: The company is incorporating more diverse speech patterns, accents, and pronunciations into its training process to improve recognition consistency.

- Adjusting Contextual Mapping: Apple is refining how the AI determines word relationships based on context, reducing the occurrence of words being unintentionally linked.

- Monitoring User-Reported Issues: The company encourages users to report errors in dictation so that patterns can be identified and proactively addressed in future software updates.

This proactive approach underscores Apple’s broader effort to improve artificial intelligence while avoiding linguistic misinterpretations that could unintentionally spark controversy. The company has experienced similar challenges in the past with AI-driven predictive text and autocorrect functions. In each instance, Apple has swiftly worked to analyze and correct unintended errors through system updates.

Experts in voice recognition have welcomed Apple’s quick response, highlighting the importance of continuous AI refinement. Dr. Rachel Morgan, a computational linguist at Stanford University, noted, “Speech recognition models are inherently dynamic, meaning they need constant exposure to new data and linguistic trends. Apple’s commitment to improving its dictation feature is a step in the right direction, ensuring that technology better serves users without unintended bias.”

Apple’s focus on improving dictation accuracy also aligns with the broader tech industry’s ongoing efforts to make AI-based language tools more reliable and inclusive. Other companies, including Google and Microsoft, face similar challenges in ensuring that their speech-to-text services minimize recognition errors while adapting to the complexities of human communication.

Moving forward, Apple remains committed to refining its AI systems to avoid misinterpretations like this one. The company has assured users that additional updates will be rolled out as necessary to further improve how the dictation feature processes speech, demonstrating that its priority is to maintain a dependable and unbiased dictation experience.

Ultimately, this response highlights an important reality of AI-driven technology: While these systems are becoming more advanced, they are not infallible. Companies must continue updating and fine-tuning them to enhance accuracy. By acknowledging and addressing concerns promptly, Apple is reinforcing its pledge to keep its technology fair, efficient, and evolving with user needs.